AI's Infinite Money Loop

The NVIDIA–Oracle–OpenAI triad showcases an “AI money loop” in which long-dated compute contracts, vendor financing, and lease-back structures recycle capital, inflate valuations, and concentrate counterparty risk. This article explains the mechanics behind that loop, examines how it distorts macro indicators and shifts data, labor, and environmental costs onto the public, and outlines targeted reforms to realign incentives with genuine productivity.

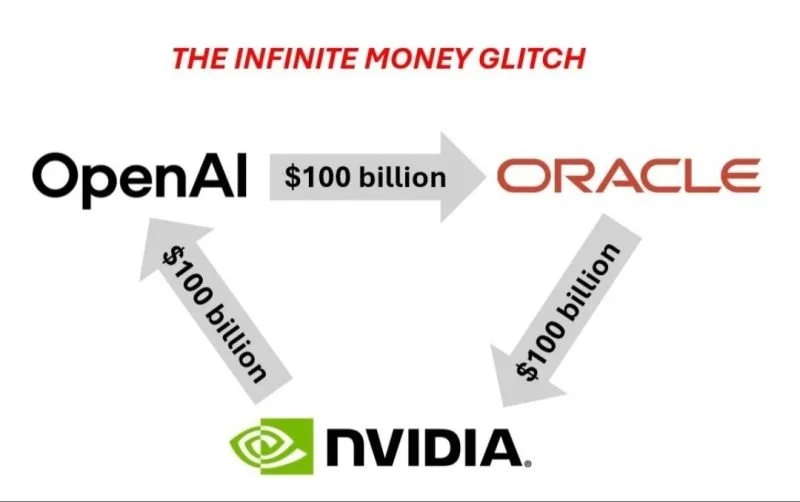

In September 2025, Oracle announced the largest computing deal in history: a $300 billion, five-year cloud contract with OpenAI. Beginning in 2027, the deal requires 4.5 gigawatts of electricity, enough to power four million U.S. homes. By 2028, OpenAI will be paying Oracle nearly $60 billion annually for compute, the rented processing power needed to train and run AI models. Days after the Oracle–OpenAI deal, NVIDIA revealed a letter of intent to invest up to $100 billion in OpenAI, structured so that OpenAI would recycle much of this capital back into NVIDIA hardware. The letter came only months after Oracle committed $40 billion to purchase NVIDIA GPUs (graphics processing units) for OpenAI’s Stargate data center in Texas, which will be leased back to OpenAI. Of the $100 billion, NVIDIA has committed the first $10 billion; the terms of the remaining $90 billion are unknown, and the ambiguity may be intentional. Why does this matter? Together, these deals create what journalists have dubbed an “infinite money glitch.”

The question for economists is not whether this is eye-popping, but how the underlying mechanisms work, what they do to balance sheets and incentives, and where risks and costs ultimately land.

How the loop actually functions:

The loop begins with long-dated compute contracts. OpenAI, or any model developer, commits to multi-year purchases of GPUs and cloud capacity, often with take-or-pay or capacity-reservation features. For Oracle, or any cloud provider, these obligations translate into “revenue visibility” that justifies new data-center buildouts and supports richer valuations. For the buyer, they signal privileged access to scarce compute, which is helpful both operationally and for fundraising. The accounting optics improve on both sides before diversified end-user demand materializes at scale.
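To make the take-or-pay mechanics concrete, here is a minimal Python sketch. The function name `take_or_pay_bill`, the 80% minimum-take fraction, and the contract figures are hypothetical illustrations, not terms from any of the deals above. It shows only that the supplier's billed revenue has a floor set by the reservation rather than by actual usage.

```python
def take_or_pay_bill(used: float, reserved: float, price: float, min_take: float) -> float:
    """Bill under a simplified, hypothetical take-or-pay clause.

    The buyer pays for what it uses, but never for less than `min_take`
    (a fraction from 0 to 1) of the capacity it reserved.
    """
    if not 0.0 <= min_take <= 1.0:
        raise ValueError("min_take must be between 0 and 1")
    billable = max(used, min_take * reserved)
    return billable * price


# Hypothetical contract: 100 units reserved at 1.0 per unit, 80% minimum take.
for used in (100, 80, 40):
    print(f"used={used:>3}  billed={take_or_pay_bill(used, 100, 1.0, 0.8):.1f}")
```

Using only 40 units is still billed as 80. That floor is what the supplier presents as revenue visibility and what the buyer carries as a fixed obligation.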

A second mechanism tightens the loop: vendor financing and related-party equity. When a supplier, or investors aligned with that supplier, provides capital to the buyer, cash exits the supplier ecosystem as an investment and returns as revenue when the buyer spends on hardware and services. Order books fill, “run-rate” revenue rises, and private marks often follow. This is not necessarily improper; it is, however, endogenous. The same ecosystem that funds the customer is booking the customer’s spend.
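A back-of-the-envelope sketch of the round trip follows. The function `recycled_revenue` and all inputs are hypothetical. It computes only how much of a supplier's booked revenue originates from capital the supplier itself provided, and it ignores cost of goods, margins, and timing.

```python
def recycled_revenue(investment: float, spend_back_share: float,
                     independent_revenue: float) -> tuple[float, float]:
    """Split a supplier's revenue into recycled and independent parts.

    investment: cash the supplier (or aligned investors) puts into a customer.
    spend_back_share: fraction of that cash the customer spends with the supplier.
    independent_revenue: revenue from customers the supplier did not fund.
    Returns (recycled revenue, recycled share of total revenue).
    """
    if not 0.0 <= spend_back_share <= 1.0:
        raise ValueError("spend_back_share must be between 0 and 1")
    recycled = investment * spend_back_share
    total = recycled + independent_revenue
    return recycled, recycled / total


# Hypothetical figures (same units throughout): invest 10, half comes back as orders.
recycled, share = recycled_revenue(10.0, 0.5, 45.0)
print(f"recycled revenue: {recycled:.1f} ({share:.0%} of total)")
```

Headline revenue counts all 50 units alike. Only the split reveals that a tenth of it traces back to the supplier's own check.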

A third mechanism converts lumpy capex into smoother income statements. Through special-purpose vehicles and lease-back arrangements, data-center assets can be moved off a buyer’s balance sheet and reappear as predictable operating expenses. Suppliers record steady utilization; buyers show cleaner earnings paths. Yet the underlying exposure remains concentrated. Payments still depend on a small set of counterparties and the same cycle governing AI funding and compute prices.
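The arithmetic behind the smoothing can be sketched with the standard level-payment (annuity) formula. This is a simplification under stated assumptions: it ignores residual values, payment escalators, taxes, and accounting classification. The asset cost, rate, and term are hypothetical, and the function names are illustrative.

```python
def level_payment(cost: float, rate: float, periods: int) -> float:
    """Payment that fully amortizes `cost` over `periods` at `rate` per period.

    Standard annuity formula: P = C * r / (1 - (1 + r) ** -n).
    """
    if periods <= 0:
        raise ValueError("periods must be positive")
    if rate == 0:
        return cost / periods
    return cost * rate / (1 - (1 + rate) ** -periods)


def present_value(payments: list[float], rate: float) -> float:
    """Discount end-of-period payments back to today."""
    return sum(p / (1 + rate) ** (t + 1) for t, p in enumerate(payments))


# Hypothetical: an asset costing 40 (e.g., $40B), financed at 8% over 5 years.
cost, rate, years = 40.0, 0.08, 5
payment = level_payment(cost, rate, years)
lease = [payment] * years  # smooth annual payments instead of one lump outlay
print(f"annual payment: {payment:.2f}")
print(f"PV of minimum payments: {present_value(lease, rate):.2f}")  # equals the cost
```

The income statement sees a level charge of about 10.02 a year instead of a single outlay of 40, yet the present value of the minimum payments is still the full 40. The exposure has changed shape, not size.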

Finally, valuation and capacity reinforce each other. As private marks and contracted backlogs climb, participants scale infrastructure, which increases fixed obligations, which then require ever-higher valuations or new rounds of financing to keep the machine solvent. Price signals become partially self-referential: optimism begets capacity; capacity begets obligations; obligations beget more optimism—until an external shock, a funding squeeze, or a performance disappointment breaks the chain.
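The self-referential dynamic, and why it is asymmetric, can be illustrated with a deliberately crude toy model. Every parameter is a hypothetical assumption: capacity is built in proportion to valuation when valuations rise but is not unwound when they fall, and fixed obligations are a constant fraction of installed capacity. It is not a forecast; it only shows that a valuation shock leaves obligations in place.

```python
def simulate_loop(growth_path: list[float], value: float = 100.0,
                  capacity_per_value: float = 0.5, obligation_rate: float = 0.2,
                  revenue: float = 10.0) -> list[tuple[float, float, float]]:
    """Toy valuation-capacity-obligation loop (all parameters hypothetical).

    Each period, valuation moves by the given growth rate; capacity ratchets up
    to `capacity_per_value * value` but never shrinks; obligations are a fixed
    fraction of capacity. Returns (value, obligations, funding gap) per period.
    """
    capacity = capacity_per_value * value
    history = []
    for g in growth_path:
        value *= 1 + g
        # Capacity is built on the way up but not dismantled on the way down.
        capacity = max(capacity, capacity_per_value * value)
        obligations = obligation_rate * capacity
        history.append((value, obligations, obligations - revenue))
    return history


# Two periods of optimism, then a 30% valuation shock.
for value, obligations, gap in simulate_loop([0.2, 0.2, -0.3]):
    print(f"value={value:6.1f}  obligations={obligations:5.1f}  gap={gap:4.1f}")
```

After the shock, valuation falls back to roughly where it started (100.8), but obligations stay at 14.4 and the funding gap at 4.4, which must now be financed against a lower valuation.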

What the numbers obscure:

On paper, this architecture looks robust: large backlogs, full utilization, “sticky” revenue. In cash terms, it is more fragile. Purchase commitments behave like debt by another name: obligations arrive regardless of realized demand. Suppliers accrue counterparty concentration because a few buyers account for a large share of sales; buyers accrue vendor dependence because a few suppliers account for a large share of costs. The same tight linkages that amplify the upswing can transmit stress in reverse when capital costs rise or when product monetization lags model hype.
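Counterparty concentration is straightforward to quantify. The sketch below reports the top-customer share of sales and a Herfindahl-Hirschman index (HHI), the sum of squared percentage shares. The HHI is borrowed from antitrust practice and applied here to a supplier's customer list rather than to a market; the function name, customer names, and sales figures are hypothetical.

```python
def customer_concentration(sales: dict[str, float], top_n: int = 1) -> tuple[float, float]:
    """Return (share of sales from the top `top_n` customers, HHI on a 0-10,000 scale)."""
    total = sum(sales.values())
    if total <= 0:
        raise ValueError("total sales must be positive")
    shares = sorted((amount / total for amount in sales.values()), reverse=True)
    top_share = sum(shares[:top_n])
    hhi = sum((100 * s) ** 2 for s in shares)  # squared percentage shares
    return top_share, hhi


# Hypothetical supplier with three customers.
sales = {"customer_a": 50.0, "customer_b": 30.0, "customer_c": 20.0}
top_share, hhi = customer_concentration(sales)
print(f"top customer: {top_share:.0%} of sales, HHI = {hhi:,.0f}")
```

An HHI of 10,000 would mean a single customer. The higher the figure, the more directly one buyer's funding or demand shock flows into the supplier's revenue.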

Accounting treatment can widen the perception gap. Long-dated reservations support confident visibility narratives even when free cash flow remains negative; lease-backs smooth earnings while embedding economic leverage in minimum payments. Because financing often originates inside the same ecosystem that recognizes the resulting revenue, standard performance signals (backlog, utilization, run-rate) are less independent than they appear.

Where the risks sit:

For buyers, the principal risk is liquidity against fixed obligations. If end-market revenue underperforms, capacity commitments still come due, and refinancing becomes harder precisely when markets reassess risk. For suppliers, the risk is correlated exposure: the same handful of customers that drive expansion also concentrate receivables and capacity planning. System-level risk arises from feedback: if a large node stumbles, the effect propagates across financing, orders, and valuations because the nodes are financially interwoven.

External costs the loop doesn’t price:

The balance-sheet gains depend on inputs whose true costs are shifted elsewhere. Training corpora often incorporate proprietary journalism, books, images, and audio; when disputes end in settlements that are immaterial relative to firm valuations, paying later remains cheaper than paying up front for licenses and verifiable provenance, which undercuts the industries that supply the raw material. Labor markets absorb rapid task automation before reskilling systems adjust, pushing income shocks onto households and public programs. Multi-gigawatt data centers impose large electricity and water demands, with costs that surface as higher utility rates, grid upgrades, and environmental strain rather than as line items on corporate income statements. In each case, private returns benefit from public or third-party subsidies that go largely unmeasured in firm-level metrics.

Policy: align incentives with the actual architecture:

The objective is not to slow innovation but to ensure that profits reflect genuine productivity rather than circular finance and off-loaded costs. Three levers match the mechanisms just described.

First, scale penalties with size and repetition. Remedies for data misuse and unsafe deployment should tie to global turnover or market capitalization, with repeat-offender multipliers and executive-compensation clawbacks. When violations cannot be absorbed as marginal costs of doing business, “build fast, pay later” loses its financial edge over licensed, auditable data practices.

Second, require auditable provenance and model-risk gating for access to large-scale compute. Tamper-evident records of training data, independent safety audits, incident reporting, and post-deployment monitoring convert safety from a public-relations exercise into a priced operational requirement. Public subsidies—tax credits, discounted power, preferential siting—should hinge on clearing these thresholds, not just on building capacity.

Third, surface circular exposures and hidden leverage. Standardized disclosures should spell out take-or-pay and capacity-reservation terms, related-party investments and credit, and SPV/lease-back obligations, accompanied by counterparty-concentration tables and stress tests that show liquidity coverage if top customers cut spend. Making endogeneity visible restores the market’s ability to price risk independently of the loop.
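A minimal version of the liquidity stress test this disclosure would require might look like the following. The coverage definition, (cash on hand + stressed revenue) / fixed obligations over the same period, is one simple choice among many, and the function name and figures are hypothetical. A ratio below 1 means the firm cannot meet its fixed commitments without new financing.

```python
def stressed_coverage(cash: float, revenue_by_customer: dict[str, float],
                      fixed_obligations: float, cut: float, top_n: int) -> float:
    """(cash + revenue after the top `top_n` customers cut spend by `cut`) / obligations."""
    if fixed_obligations <= 0:
        raise ValueError("fixed_obligations must be positive")
    if not 0.0 <= cut <= 1.0:
        raise ValueError("cut must be between 0 and 1")
    ranked = sorted(revenue_by_customer.values(), reverse=True)
    stressed_revenue = sum(
        amount * (1 - cut) if rank < top_n else amount
        for rank, amount in enumerate(ranked)
    )
    return (cash + stressed_revenue) / fixed_obligations


revenue = {"customer_a": 50.0, "customer_b": 30.0, "customer_c": 20.0}  # hypothetical
for top_n in (0, 1, 2):
    ratio = stressed_coverage(20.0, revenue, 90.0, cut=0.5, top_n=top_n)
    print(f"top {top_n} customers cut spend 50%: coverage = {ratio:.2f}")
```

In this illustration the firm covers its obligations comfortably at baseline (1.33), barely if its largest customer halves spending (1.06), and falls short if the top two do (0.89).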

Complementary steps can internalize environmental and labor externalities. Tariff structures indexed to local water scarcity and grid congestion, plus incentives limited to facilities that provide demand-response capability and heat reuse, bring infrastructure costs onto the same ledger as private returns. Where automation yields measurable productivity gains, a small levy on large compute purchases or AI-linked revenue can finance portable benefits, wage insurance, and training credits that scale automatically with deployment.

Conclusion:

The NVIDIA–Oracle–OpenAI triad is a vivid hook, but the deeper story is structural: long-dated obligations that resemble debt, vendor-funded demand, off-balance-sheet smoothing, and valuation feedbacks that can run in both directions. The architecture produces impressive optics while concentrating risk and shifting costs outward. Pricing the architecture (through scaled penalties, verifiable provenance and safety gating, and disclosure that exposes circular finance) does not slow progress. It redirects investment toward durable growth, where independent demand, not a hall of mirrors, supports the next wave of AI.